In the world of imaging and optics, achieving clarity and precision is paramount. Whether it’s for quality control, machine vision, or barcode reading, understanding image resolution is essential to the success of an application. In this blog post, we share an email exchange with one of our clients about calculating the resolution of an object on a sensor. Through a series of questions and answers, we cover the fundamental concepts, challenges, and critical factors that influence image resolution.

Question 1: What is the importance of calculating the resolution of an object on a sensor in imaging?

Answer: Calculating the resolution of an object on a sensor is crucial in imaging as it helps determine how well an imaging system can capture and represent details of an object. This information is vital for various applications, including quality control, machine vision, and barcode reading.

Question 2: How is the magnification of the imaging lens related to calculating object resolution?

Answer: The magnification of the imaging lens (M) plays a key role in calculating the object’s resolution. The formula for magnification is M = h’/h, where h’ is the horizontal sensor size and h is the horizontal field of view (FOV), both expressed in the same units. Knowing the magnification allows us to determine the size of the object image on the sensor.
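
As a quick illustration of the formula M = h’/h, here is a minimal Python sketch. The function name `magnification` and the example values (a 4.8 mm wide sensor and a 96 mm wide field of view) are assumptions chosen for illustration; they do not come from the email exchange.

```python
def magnification(sensor_width_mm: float, fov_width_mm: float) -> float:
    """Return M = h'/h, with both widths given in millimeters."""
    if sensor_width_mm <= 0 or fov_width_mm <= 0:
        raise ValueError("sensor width and FOV must be positive")
    return sensor_width_mm / fov_width_mm


# Assumed example values, not taken from the original exchange.
sensor_width_mm = 4.8   # horizontal sensor size h'
fov_width_mm = 96.0     # horizontal field of view h

m = magnification(sensor_width_mm, fov_width_mm)
print(f"M = {m:.3f}")
```

A magnification below 1 means the object appears smaller on the sensor than it is in reality, which is the usual situation in machine vision and code reading.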

Question 3: Once we know the magnification, how can we calculate the size of the object image on the sensor?

Answer: To calculate the size of the object image on the sensor, you can use the formula S’ = S * M, where S’ represents the imaged object size on the sensor, S is the actual object size, and M is the magnification of the lens.

Question 4: How can the imaged size of an object on the sensor be converted to spatial frequency?

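Answer: You can convert the imaged size of an object on the sensor to spatial frequency using the formula F = 1/(2 * S’), where F is the spatial frequency in line pairs per millimeter (lp/mm) and S’ is the imaged object size in millimeters. The factor of 2 appears because one line pair consists of the object plus an equally sized gap next to it (for example, one dark bar and one bright bar).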
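
The two formulas S’ = S * M and F = 1/(2 * S’) can be chained in a few lines of Python. This is a minimal sketch: the function names are hypothetical, and the example values (a 0.127 mm feature and a magnification of 0.05) are assumptions for illustration only.

```python
def image_size_mm(object_size_mm: float, magnification: float) -> float:
    """Return S' = S * M, the size of the object image on the sensor."""
    return object_size_mm * magnification


def spatial_frequency_lp_mm(imaged_size_mm: float) -> float:
    """Return F = 1 / (2 * S') in line pairs per millimeter."""
    if imaged_size_mm <= 0:
        raise ValueError("imaged size must be positive")
    return 1.0 / (2.0 * imaged_size_mm)


# Assumed example values, not taken from the original exchange.
object_size_mm = 0.127   # S, smallest feature to resolve
magnification = 0.05     # M

s_img = image_size_mm(object_size_mm, magnification)
f = spatial_frequency_lp_mm(s_img)
print(f"S' = {s_img * 1000:.2f} um, F = {f:.1f} lp/mm")
```

The resulting spatial frequency is the value to look up on the lens MTF chart and to compare against the limits of the sensor.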

Question 5: Is it possible to resolve an object that covers just 1 pixel on the sensor with 100% probability?

Answer: No, an object that is imaged onto only 1 pixel cannot be resolved with 100% probability. The ability to resolve objects depends on the contrast between neighboring pixel intensities. When all pixels have the same intensity, there is no contrast, and the objects cannot be distinguished.

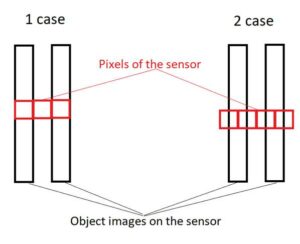

Question 6: Can you explain the difference between Case 1 and Case 2 in image resolution?

Answer: In Case 1, objects can be resolved because pixels have different intensities, creating enough contrast to distinguish the objects. In Case 2, the objects cannot be resolved because all pixels have the same intensity, resulting in zero contrast.
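
One way both cases can arise from the same object is a shift of the pattern relative to the pixel grid. The sketch below rests on that assumption, which is ours and is not confirmed by the figure: it images a bar pattern whose bars are exactly one pixel wide, once aligned with the pixels and once shifted by half a pixel. Each pixel value is the average brightness over the pixel area. All names and values are hypothetical.

```python
import math


def sample_bar_pattern(offset_px, n_pixels=8, bar_width_px=1.0, subsamples=1000):
    """Average an alternating bright/dark bar pattern over each pixel.

    offset_px shifts the pattern relative to the pixel grid.
    Bright bars have intensity 1.0 and dark bars 0.0.
    """
    values = []
    for i in range(n_pixels):
        total = 0.0
        for k in range(subsamples):
            x = i + (k + 0.5) / subsamples            # position inside pixel i
            bar_index = math.floor((x - offset_px) / bar_width_px)
            total += 1.0 if bar_index % 2 == 0 else 0.0
        values.append(total / subsamples)
    return values


def michelson_contrast(values):
    """Return (max - min) / (max + min), or 0.0 for an all-dark image."""
    hi, lo = max(values), min(values)
    return 0.0 if hi + lo == 0 else (hi - lo) / (hi + lo)


aligned = sample_bar_pattern(offset_px=0.0)   # bars line up with pixels
shifted = sample_bar_pattern(offset_px=0.5)   # bars straddle two pixels

print("aligned:", aligned, "contrast:", michelson_contrast(aligned))
print("shifted:", shifted, "contrast:", michelson_contrast(shifted))
```

In the aligned run the pixels alternate between bright and dark, so the bars are distinguishable. In the shifted run every pixel sees half a bright bar and half a dark bar, so all pixels report the same intensity and the contrast drops to zero.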

Question 7: What is the Nyquist-Shannon theorem, and how does it relate to image resolution?

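Answer: The Nyquist-Shannon sampling theorem states that a signal must be sampled at a rate of at least twice its highest frequency. For an image sensor, this means at least two pixels per line pair, so the sensor’s Nyquist frequency is 1/(2 × pixel pitch). Because a pattern sampled right at this limit can still land on the pixel grid in such a way that all pixels have the same intensity (Case 2 above), a practical target is at least two pixels per smallest object feature. For color sensors, the Nyquist frequency is halved, meaning you need more pixels per object to achieve the same level of detail. This theorem plays a crucial role in understanding the limitations of sensor resolution.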
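
To put numbers on this, the sensor Nyquist frequency can be computed from the pixel pitch using the standard relation f_N = 1/(2 × pixel pitch), since one line pair needs two pixels. The sketch below is illustrative only: the function name is hypothetical, the 3.45 µm pixel pitch is an assumed example value, and the halving for color sensors follows the rule of thumb stated in the answer above.

```python
def nyquist_lp_per_mm(pixel_pitch_um: float, color: bool = False) -> float:
    """Return the sensor Nyquist frequency in lp/mm.

    Monochrome: one line pair spans two pixels, so f_N = 1 / (2 * pitch).
    Color: halved, following the rule of thumb used in this post.
    """
    if pixel_pitch_um <= 0:
        raise ValueError("pixel pitch must be positive")
    pitch_mm = pixel_pitch_um / 1000.0
    f_n = 1.0 / (2.0 * pitch_mm)
    return f_n / 2.0 if color else f_n


# Assumed example pixel pitch, not taken from the original exchange.
pitch_um = 3.45
print(f"mono : {nyquist_lp_per_mm(pitch_um):.1f} lp/mm")
print(f"color: {nyquist_lp_per_mm(pitch_um, color=True):.1f} lp/mm")
```

Any spatial frequency in the image that lies above the returned value cannot be sampled reliably by that sensor, regardless of how good the lens is.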

Question 8: How does the color filter in a sensor impact its real resolution?

Answer: In a color sensor, the use of a color filter array (e.g., RGBG) means that four pixels (a 2×2 block) are used to create one pixel of the color image. Consequently, the real resolution of the sensor is reduced by a factor of two in each direction compared to a monochrome sensor with the same pixel pitch.

Question 9: How do lens specifications and sensor resolution relate to meeting the requirements for reading 5 mil codes?

Answer: Lens specifications, such as the modulation transfer function (MTF), and sensor resolution are critical factors in meeting the requirements for reading 5 mil codes, whose narrowest element is 5 mil (0.127 mm) wide. The MTF of the lens and the sensor’s pixel pitch determine whether the system can resolve such fine details at different working distances.
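
The checks discussed so far can be combined into one rough feasibility estimate for a 5 mil code. The following is a sketch under stated assumptions, not a validated design tool: the `check_code` helper and the `CodeCheck` container are hypothetical, the sensor (1280 pixels wide, 3.0 µm pitch) and the 100 mm field of view are made-up example values, and the halved Nyquist frequency for color sensors follows the rule of thumb from this post. The lens MTF is not modeled; it has to be read from the lens datasheet at the computed frequency.

```python
from dataclasses import dataclass

MIL_TO_MM = 0.0254  # 1 mil = 0.001 inch = 0.0254 mm


@dataclass
class CodeCheck:
    magnification: float
    pixels_per_module: float
    code_frequency_lp_mm: float
    sensor_nyquist_lp_mm: float
    below_nyquist: bool


def check_code(module_mil, fov_width_mm, n_pixels_h, pixel_pitch_um, color=False):
    """Estimate whether a code module can be sampled by the sensor."""
    sensor_width_mm = n_pixels_h * pixel_pitch_um / 1000.0    # h'
    m = sensor_width_mm / fov_width_mm                        # M = h'/h
    module_img_mm = module_mil * MIL_TO_MM * m                # S' = S * M
    freq = 1.0 / (2.0 * module_img_mm)                        # F = 1/(2 * S')
    nyquist = 1000.0 / (2.0 * pixel_pitch_um)                 # mono sensor
    if color:
        nyquist /= 2.0                                        # rule of thumb
    return CodeCheck(
        magnification=m,
        pixels_per_module=module_img_mm * 1000.0 / pixel_pitch_um,
        code_frequency_lp_mm=freq,
        sensor_nyquist_lp_mm=nyquist,
        below_nyquist=freq < nyquist,
    )


# Assumed example system, not taken from the original exchange.
mono = check_code(5, fov_width_mm=100.0, n_pixels_h=1280, pixel_pitch_um=3.0)
rgb = check_code(5, fov_width_mm=100.0, n_pixels_h=1280, pixel_pitch_um=3.0, color=True)
print(mono)
print(rgb)
```

With these assumed numbers the code frequency falls below the Nyquist frequency of the monochrome sensor but above that of the color sensor, which shows why the same lens and field of view can work with one sensor type and fail with the other. Staying below Nyquist is a necessary condition only; the lens must also deliver enough MTF at that frequency.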

Question 10: What are the challenges in ensuring the lens and sensor can meet the specified requirements for code reading?

Answer: Challenges in meeting code reading requirements include ensuring that the lens’s MTF is sufficient for the desired spatial frequency and accounting for sensor limitations, especially in color sensors where the Nyquist frequency is reduced. Tolerances and real-world conditions must also be considered to determine if the system can reliably read codes.

In the realm of imaging technology, the journey to perfecting image resolution is a continuous pursuit. We hope this email exchange with our client has shed light on what is involved. From understanding the importance of magnification to navigating the challenges posed by color sensors and real-world conditions, we’ve explored the critical elements that shape our ability to capture the finest details. As technology advances and new innovations emerge, the quest for sharper, clearer images will persist. With each insight gained and every question answered, we move one step closer to unlocking the full potential of imaging systems.